The Creative A/B testing tool automatically analyzes performance to identify any creatives that are over- or underperforming relative to the ad group average, based on your settings. The success criteria and settings for creative A/B tests (which test the performance of different ad copy) and landing page A/B tests are set at the Marin account level.

Note: Creative testing settings do not currently exist in MarinOne, so your tests should still be set up in Marin Search. However, you can still view the results of your creative tests in the grids in MarinOne by following the steps listed below.

What Is Creative Testing?

Creative testing gives you the confidence and control you need to make smarter decisions about your creatives. It allows you to easily compare the performance of one creative to another, giving you valuable real-world data you can use to improve your campaign strategy.

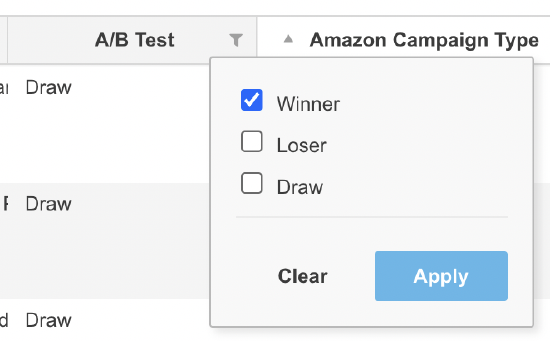

You can use the A/B testing tool to choose from a number of criteria by which to test your creatives. For example, you can compare them by conversions & impressions, clicks & impressions, Return On Investment (ROI), and many more. Once you've chosen your settings, you can use the A/B Testing filter in the main Creatives grid to check the status of your creatives at any time. You can filter for Winner, Loser, or Draw. You can also use the View Builder to bring the A/B Test column into the grid and see the per-creative results at a glance.

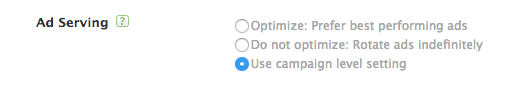

Note that to get the best results from creative testing, we recommend that you have your ads set to Do Not Optimize: Rotate ads indefinitely in your group-level settings. To learn more about ad rotation settings, check out our article Ad Rotation Options with Google.

How The Platform Calculates Winners, Losers, Or A Draw

When determining Winners and Losers within an ad group, the platform checks the selected criteria (e.g. Conversions / Impressions) for each creative, then compares the result against the average for the other creatives in the group. If there is a statistically significant difference between a creative and the group average, that creative is marked as a Winner or Loser. If there is no statistically significant difference, it is marked as a Draw.
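To make the comparison concrete, here is a minimal Python sketch of how each creative's rate could be set against the average of the other creatives in its group. It is an illustration only, not Marin's implementation: the function name `rate_vs_rest`, the field names, and the choice to pool the other creatives' totals (rather than average their individual rates) are all assumptions.

```python
def rate_vs_rest(creatives, numerator="conversions", denominator="impressions"):
    """Return {creative_id: (own_rate, rest_rate)} for one ad group.

    rest_rate pools the totals of every *other* creative in the group.
    Pooling is an assumption made for this sketch.
    """
    total_num = sum(c[numerator] for c in creatives)
    total_den = sum(c[denominator] for c in creatives)
    result = {}
    for c in creatives:
        # Leave the current creative out of the baseline.
        rest_num = total_num - c[numerator]
        rest_den = total_den - c[denominator]
        own = c[numerator] / c[denominator] if c[denominator] else 0.0
        rest = rest_num / rest_den if rest_den else 0.0
        result[c["id"]] = (own, rest)
    return result


# Hypothetical ad group with three creatives.
group = [
    {"id": "ad_a", "conversions": 50, "impressions": 1000},
    {"id": "ad_b", "conversions": 30, "impressions": 1000},
    {"id": "ad_c", "conversions": 20, "impressions": 1000},
]
rates = rate_vs_rest(group)
```

In this made-up group, `ad_a` converts at 5% against 2.5% for the rest of the group. Whether that gap counts as a win still depends on the significance check.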

Note: A draw can also mean that there's not yet enough data for the platform to declare a winner; for example, if the thresholds or confidence level haven't been met.
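The three outcomes can be summarized in a short Python sketch. This is a simplified illustration under stated assumptions, not the platform's code: `label_creative` and `min_impressions` are hypothetical names, the default threshold value is made up, and the sketch assumes a criterion where a higher rate is better.

```python
def label_creative(own_rate, rest_rate, impressions, significant,
                   min_impressions=1000):
    """Return "Winner", "Loser", or "Draw" for one creative.

    min_impressions stands in for the account's data thresholds
    (hypothetical name and value).
    """
    # Not enough data yet: report a Draw rather than guess.
    if impressions < min_impressions:
        return "Draw"
    # Enough data, but the difference is not statistically significant.
    if not significant:
        return "Draw"
    # Assumes a higher rate is better for the chosen criterion.
    return "Winner" if own_rate > rest_rate else "Loser"
```

Note that both kinds of Draw look the same in the grid, so a Draw on a new creative may simply mean the test needs more time.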

A Note About Confidence Level

It works like this: When comparing a creative against the average of all other creatives in the group, the platform uses a 90% confidence level to determine whether there is a significant difference. The platform uses an algorithm known as Student's t-test to assess whether the difference between two values (for example, the conversion rates of two creatives) is statistically significant.
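For readers who want to see the idea in code, the following Python sketch computes a pooled two-sample t statistic for two conversion rates and checks it at the 90% confidence level. It is a rough illustration, not the platform's exact calculation. Two assumptions are worth calling out: each impression is treated as a 0/1 observation, and the critical value comes from the normal distribution, which closely approximates the t distribution only when impression counts are large. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def t_statistic(conv_a, imps_a, conv_b, imps_b):
    """Pooled two-sample t statistic for two conversion rates.

    Assumption: every impression is a 0/1 observation (converted or not).
    """
    p_a = conv_a / imps_a
    p_b = conv_b / imps_b
    # For 0/1 data, the sum of squared deviations is n * p * (1 - p).
    ss_a = imps_a * p_a * (1 - p_a)
    ss_b = imps_b * p_b * (1 - p_b)
    df = imps_a + imps_b - 2
    pooled_var = (ss_a + ss_b) / df
    se = sqrt(pooled_var * (1 / imps_a + 1 / imps_b))
    return (p_a - p_b) / se if se > 0 else 0.0


def is_significant(t, confidence=0.90):
    """Two-sided check using a normal approximation to the t distribution."""
    critical = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return abs(t) > critical


# Hypothetical numbers: 50 vs. 30 conversions on 1,000 impressions each.
t = t_statistic(50, 1000, 30, 1000)
different = is_significant(t)
```

With these made-up numbers the t statistic is roughly 2.28, which exceeds the two-sided 90% critical value of about 1.645, so the difference would count as significant in this sketch.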

Note: Any differences between ads will cause Marin to see these ads as distinct for testing purposes. This includes capitalization in headlines.

How To

Analyzing The Results Of Your Creative Testing

Once your creative test has been running for your chosen period of time, it's time to check the results. You can analyze your results in a variety of different ways in Marin Search or MarinOne.

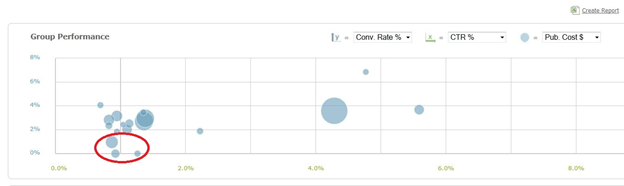

As a starting point, sort your active groups by highest spenders for the month. Bring in a Bubble chart and identify the high-spend groups with the lowest conversion rate (revenue return) and CTR. These are prime starting points for A/B testing.

Use the tool to identify best performing ad copy for these groups. Pause the Losers. Clone and create message variations of Winners.

Additionally, use dimensions to tag new creatives with timestamps so you can easily track progress. If you're testing the same copy across multiple groups or campaigns, give those creatives one tag within a dimension to determine aggregated best performers.
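As a rough illustration of what aggregating by a dimension tag means, the Python sketch below sums conversions and impressions across groups for each tag and then compares the combined rates. The tag values, field names, and `aggregate_by_tag` helper are hypothetical, and the figures are invented for the example.

```python
from collections import defaultdict


def aggregate_by_tag(creatives):
    """Return {tag: combined conversions / impressions} across all groups."""
    totals = defaultdict(lambda: {"conversions": 0, "impressions": 0})
    for c in creatives:
        totals[c["tag"]]["conversions"] += c["conversions"]
        totals[c["tag"]]["impressions"] += c["impressions"]
    return {
        tag: (t["conversions"] / t["impressions"] if t["impressions"] else 0.0)
        for tag, t in totals.items()
    }


# The same two messages tested in two different groups (hypothetical data).
creatives = [
    {"group": "Shoes", "tag": "free-shipping", "conversions": 12, "impressions": 1000},
    {"group": "Hats", "tag": "free-shipping", "conversions": 8, "impressions": 1000},
    {"group": "Shoes", "tag": "20-percent-off", "conversions": 5, "impressions": 1000},
    {"group": "Hats", "tag": "20-percent-off", "conversions": 5, "impressions": 1000},
]
tag_rates = aggregate_by_tag(creatives)
best_tag = max(tag_rates, key=tag_rates.get)
```

Summing the raw counts before dividing keeps a small group from skewing the combined rate, which is why the sketch does not simply average the per-group rates.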

Note: At this time, Marin only offers creative testing at the creative level. Testing cannot be performed at the group or campaign level. These settings may, however, be available on the channels themselves (on Google's or Microsoft's ad site), and we do offer Dimensions to aggregate your creative-level data in any grouping you would like.

To view the results of your creative tests in the MarinOne grids, follow these steps:

- First, navigate to the Ads tab.

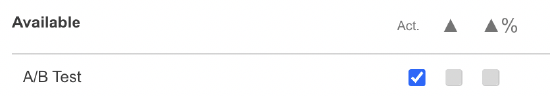

- From there, click on the Column Selector in the top-right above the grid.

- From the Column Selector, locate the A/B Test column and check the corresponding box to add it to the grid.

- From the A/B Test column, use the filters to view Winner, Loser, or Draw.

Best Practices

- If you're testing across multiple campaigns or groups, tag your creatives with dimensions in order to determine aggregate best performers.

- When you have a winner, pause your losing creatives and continue the testing process, always pitting your winning creatives against new creatives.

- Your publisher group-level ad serving settings should be set to Do Not Optimize: Rotate ads indefinitely. To access your creative rotation settings, click into the group of your choosing, then click on the Settings sub-tab and scroll down to the Ad Serving setting. From there, you can update your Ad Serving settings and click Save.